Prolific: Setting standards for authentic human data collection

Discover how Prolific's upgraded data quality system, Protocol, sets the industry standard for authentic human data collection through five comprehensive layers of quality assurance. Learn why our uncompromising focus on data integrity continues to deliver genuine human insights in an age where AI contamination threatens research validity.

Watch our 90-second summary video for a high-level snapshot, or read on for more detail about how each layer works to deliver high-quality data.

The crisis of trust in online research & data collection

We're living through a fundamental shift. As AI becomes increasingly sophisticated and ubiquitous, the line between human and machine responses is blurring in ways that threaten the foundation of research and human data collection.

The stakes are high. Researchers are losing confidence in their data. Companies are questioning the insights driving decisions. AI-generated responses increasingly contaminate the data we rely on to be human.

Worse yet, it’s difficult to know how much it’s affecting your data. AI-generated responses are often convincing, sounding just like what someone would say.

There's nothing wrong with synthetic data when it's labeled as such. AI-generated insights have their place and can be incredibly valuable for certain research applications. The problem is deception.

When you commission human data, you deserve human data

When you're asking how people experience something, you need genuine human perspectives, not AI interpretations of what humans might think. Contaminated data means failed projects, invalid conclusions, and wasted resources. Undetected AI responses can compromise months of work and thousands in funding.

That’s what we’re protecting at Prolific: access to genuine human insights when you need them. So you can run breakthrough research, build the next popular LLM, ship brilliant new products, and more.

The Prolific promise: authenticity above all else

At Prolific, we’ve made an unwavering commitment: to focus on data integrity above all else.

Data integrity has been our founding principle since 2014, long before AI contamination became an industry crisis. While other companies chase scale or speed at any cost, we've always known that nothing matters more than data integrity. That’s why we’re committed to protecting authentic responses from real humans when you need them.

Independent research consistently validates Prolific as setting the standard for data quality in online platforms (2017-2025). Only 0.5% of Prolific participant responses were rejected in 2025. And our September internal data quality audit shows that just 0.99% of participants were flagged for AI-generated responses.

Today, we’re introducing the latest upgrade of Protocol, our increasingly powerful, state-of-the-art system that sets industry standards for top-quality, authentic human insights.

Introducing Protocol: Our multi-layered defense system

We architect authentic data through Protocol, our comprehensive multi-layered system that ensures the data you receive is genuinely human and of the highest quality. It also creates a premium environment where authentic participants can thrive and earn fairly.

Think of Protocol like airport security for your research: multiple checkpoints, continuous monitoring, and sophisticated detection, all working together to maintain the highest standards of data integrity.

Layer 1: Who gets to become a participant

Ensuring quality over quantity for our pool.

While competitors often focus on maximizing pool size and speed first, offering millions of less thoroughly checked participants, Prolific is selective about whom it lets in. Several years ago, we realized that having a waitlist to get into our pool was an advantage for our data quality. Inviting people thoughtfully based on demand maintains strong demographic representation in the pool. And it acts as a natural barrier to fraud.

Newer participants carry more risk, since we have less insight about how they will behave. So, limiting that risk is beneficial for data quality. Going slowly also helps us keep verified participants happy, balancing supply and demand to ensure the best experience for everyone.

Maintaining a happy, healthy core participant pool is key to Prolific’s data quality. Participants are motivated to do well and keep coming back. It’s why you can achieve such good retention rates for longitudinal studies and tasks that require repeat contact with the same participants.

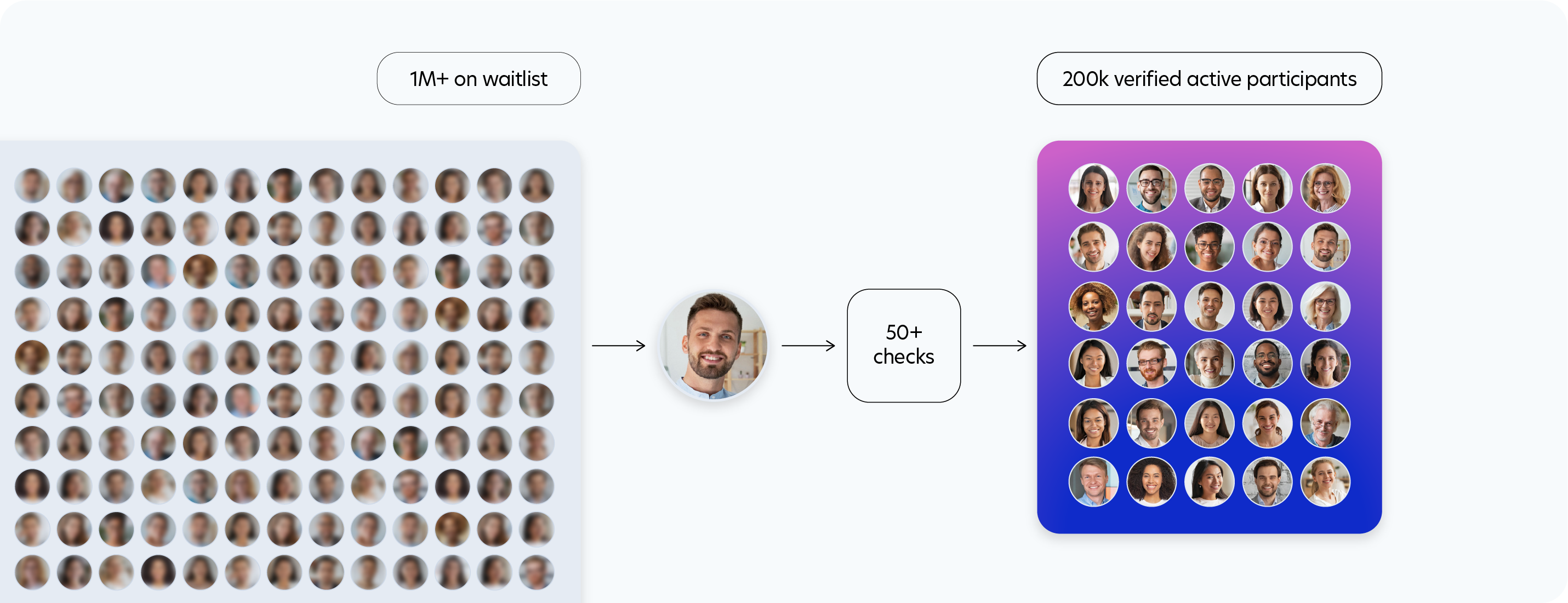

Only 13% of people are invited off the waitlist and have the chance to become a participant. Once invited, they must pass over 50 verification checks before they can begin taking studies.

Just as travelers need valid identification at the airport, every Prolific participant has had to pass a series of checks, such as:

- Bank-grade identity checks through Onfido

- IP address validation and deduplication

- Response quality assessments

Our onboarding survey filters out low-quality participants by detecting things like AI misuse, speeding, and low-effort answers—just a few of the behaviors that result in removal.

Only 55% of people invited off our waitlist pass onboarding and are verified to take studies. Ensuring high-quality participants is also what enables us to offer industry-leading earnings to the genuine participants on our platform, benefiting participants and data collectors alike.
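As a rough worked example of the funnel described above: if the 13% invitation rate and the 55% onboarding pass rate apply in sequence to the same group of waitlisted people (an assumption, since the two figures may be measured over different periods), roughly 7% of people on the waitlist end up verified. The function name and the waitlist size of 10,000 are illustrative only.

```python
def verified_share(invite_rate: float, onboarding_pass_rate: float) -> float:
    """Share of waitlisted people who end up verified.

    Assumes the two stages apply one after the other to the same population.
    """
    return invite_rate * onboarding_pass_rate


# Rates quoted in the text: 13% invited, 55% of those pass onboarding.
share = verified_share(0.13, 0.55)
print(f"{share:.2%}")  # 7.15%

# Illustrative waitlist size (hypothetical, not a Prolific figure).
waitlist_size = 10_000
print(round(waitlist_size * share))  # 715
```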

Layer 2: Pool-wide ongoing fraud detection

Ad hoc re-authentication and sophisticated fraud detection across our entire pool.

Airport security doesn't end at the first checkpoint, and neither does ours. While most platforms only verify participants at signup and never again, Prolific runs continuous checks throughout each participant’s journey to ensure quality is maintained.

Periodic identity re-confirmation checks, regular required profile updates, and a bi-monthly quality audit that samples our pool all ensure standards don’t slip.

Not only do we run ongoing individual checks, but our system monitors behavior across the entire participant pool, detecting patterns of fraud or bot-like actions. If suspicious activity is flagged, our full-time team dedicated to data quality is on hand to respond quickly.

These checks also protect opportunities for genuine participants to take part, by removing unfair competition for studies and tasks.

Layer 3: In-study quality tools

Empowering customers with authenticity safeguards.

Although Prolific can’t see the responses collected in studies, we empower customers with extra tools and signals to determine response quality. While many platforms charge extra for add-ons like these, they are all included for Prolific users.

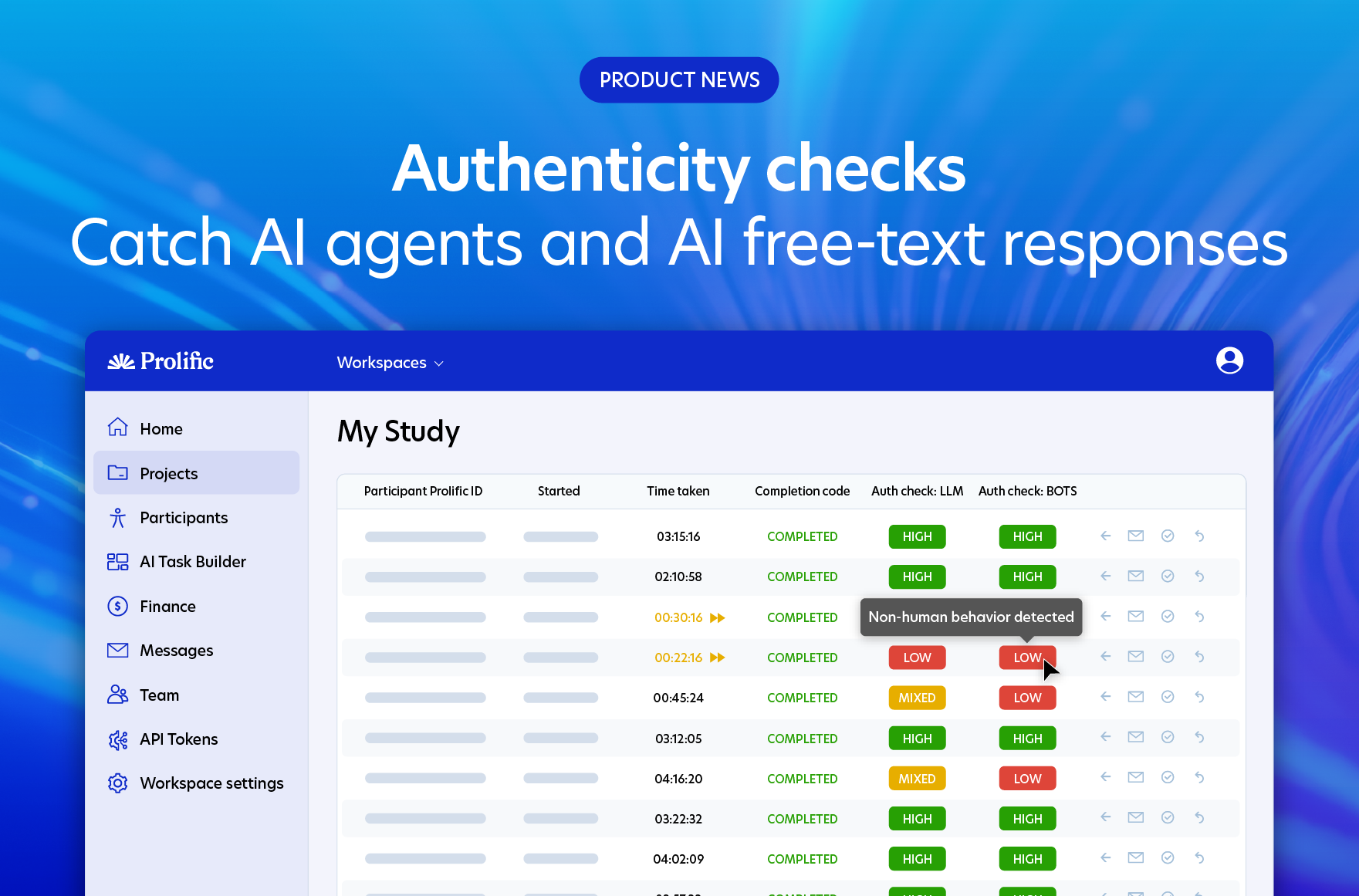

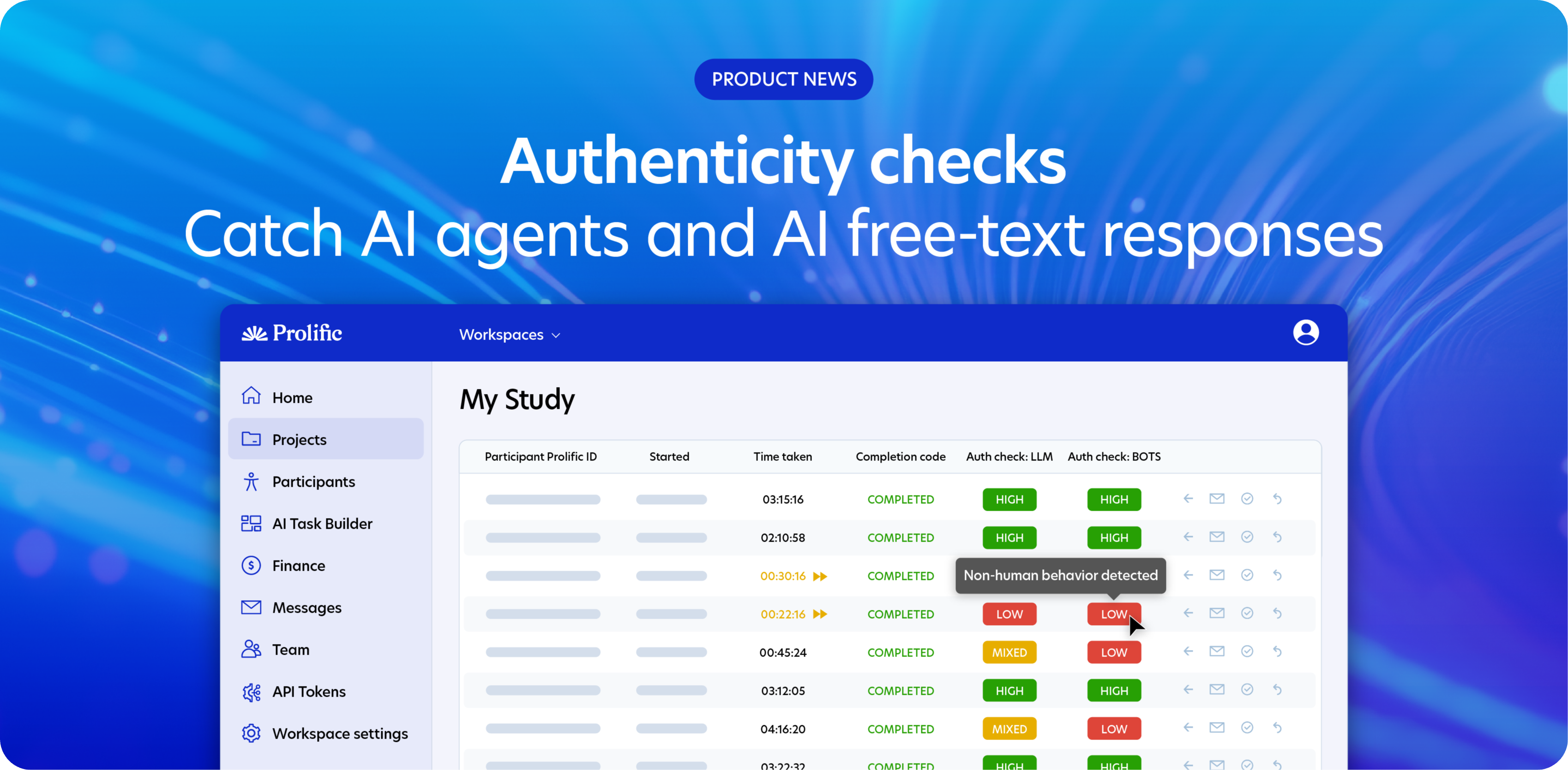

Unique to Prolific, Authenticity checks use Prolific’s proprietary machine learning model to flag two kinds of AI misuse, giving researchers confidence that results are authentically human. LLM authenticity checks detect when participants use AI tools like ChatGPT to answer free-text questions, with 98.7% precision. Bot authenticity checks catch AI agents that are answering your study, with 100% accuracy in testing. Because AI-generated responses can be told apart from real human ones, flagged responses can be rejected and replaced.

Similarly, we provide guidance to include in-study attention and comprehension checks, where participants can be rejected if they fail enough checks in a given study.

Prolific also monitors the time taken over tasks and whether it matches up with the researcher's expectations. “Exceptionally fast” submissions are automatically flagged and can be auto-rejected — a powerful feature that instantly replaces clearly low-integrity or bot responses with those of genuine, more thoughtful participants.
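The speed check described above could be sketched roughly as follows. This is a minimal illustration, not Prolific's implementation: the `Submission` class, the function names, and the 25% cutoff are all hypothetical, since the text does not say how "exceptionally fast" is defined.

```python
from dataclasses import dataclass

# Hypothetical cutoff: a submission finished in under 25% of the expected
# time counts as "exceptionally fast". The real rule is not stated.
FAST_FRACTION = 0.25


@dataclass
class Submission:
    participant_id: str
    seconds_taken: float


def is_exceptionally_fast(sub: Submission, expected_seconds: float,
                          fraction: float = FAST_FRACTION) -> bool:
    """True when the time taken falls below the chosen share of the expected time."""
    return sub.seconds_taken < expected_seconds * fraction


def flag_fast(subs: list[Submission], expected_seconds: float) -> list[str]:
    """Return the IDs of submissions that should be flagged for review."""
    return [s.participant_id for s in subs
            if is_exceptionally_fast(s, expected_seconds)]


# The researcher expects the study to take 10 minutes (600 seconds).
submissions = [
    Submission("p1", 580.0),
    Submission("p2", 95.0),   # far quicker than expected
    Submission("p3", 410.0),
]
print(flag_fast(submissions, expected_seconds=600))  # ['p2']
```

Comparing against the researcher's own estimate, rather than a fixed number of seconds, lets the same rule work for both short and long studies.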

Layer 4: Performance-based quality controls

Championing the best participants and removing poor performers.

Every participant builds a dynamic quality record based on researcher feedback. Our system automatically tracks whether each participant's submission was approved or rejected, building a quality history for every individual.

Receiving the odd rejection is normal. We all have bad days, and each participant is only human! However, the number of rejections is always weighed up against the number of approved submissions. Participants who receive too many rejections are no longer allowed to take part in studies on Prolific.
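To illustrate how rejections can be weighed against approvals, here is a minimal sketch. The 90% minimum approval rate, the 10-submission grace period, and the function names are assumptions made for the example; the text does not give the actual thresholds.

```python
# Hypothetical thresholds for illustration only; not Prolific's actual rules.
MIN_APPROVAL_RATE = 0.90
MIN_SUBMISSIONS = 10  # below this, there is too little history to judge


def approval_rate(approved: int, rejected: int) -> float:
    """Fraction of reviewed submissions that were approved."""
    total = approved + rejected
    if total == 0:
        return 1.0  # no history yet, so nothing is held against the participant
    return approved / total


def may_take_studies(approved: int, rejected: int) -> bool:
    """Weigh rejections against approvals rather than counting rejections alone."""
    if approved + rejected < MIN_SUBMISSIONS:
        return True
    return approval_rate(approved, rejected) >= MIN_APPROVAL_RATE


print(may_take_studies(approved=198, rejected=2))  # True: 99% approval
print(may_take_studies(approved=12, rejected=8))   # False: 60% approval
```

Using a ratio means two rejections out of 200 submissions are treated very differently from two out of five, which matches the idea that the odd rejection is normal.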

Meanwhile, our best participants get priority access to studies. This creates a virtuous cycle: researchers get better data, quality participants are rewarded, and low-integrity actors are naturally excluded from the ecosystem.

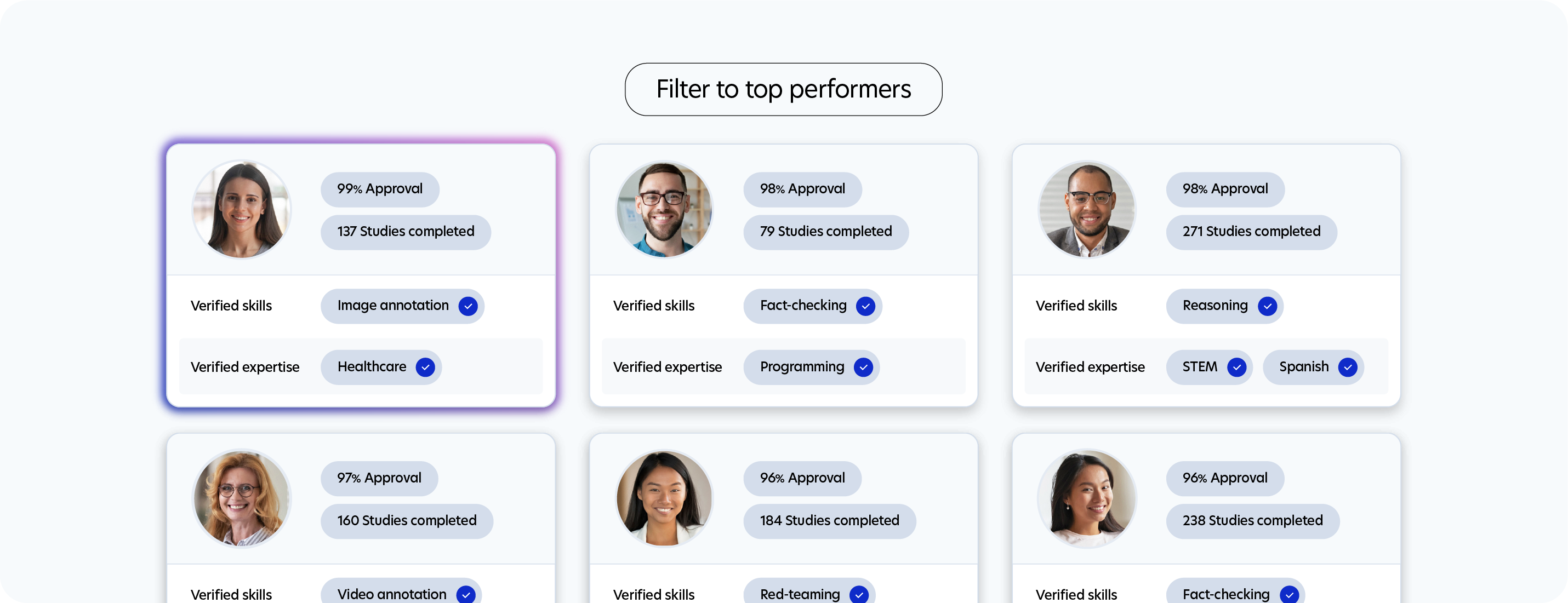

Prolific also equips researchers with free screeners that correspond to participant quality. For example, you can filter to participants who’ve taken 50+ studies on Prolific, with an approval rating of 100%.
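The screener example above (50+ studies and a 100% approval rating) amounts to a simple filter over participant records. The sketch below uses a hypothetical `Participant` record and `screen` function to show the logic; it is not Prolific's API.

```python
from dataclasses import dataclass


@dataclass
class Participant:
    participant_id: str
    studies_completed: int
    approval_rating: float  # percentage, 0 to 100


def screen(pool: list[Participant], min_studies: int = 50,
           min_approval: float = 100.0) -> list[Participant]:
    """Keep participants who meet both the experience and approval criteria."""
    return [p for p in pool
            if p.studies_completed >= min_studies
            and p.approval_rating >= min_approval]


# Made-up records for illustration.
pool = [
    Participant("p1", 120, 100.0),
    Participant("p2", 30, 100.0),   # too few studies
    Participant("p3", 400, 98.5),   # approval rating below 100%
]
print([p.participant_id for p in screen(pool)])  # ['p1']
```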

For an extra premium, you can filter to participants who’ve completed our additional training and skill assessments that make them ideal for particular tasks. Select participants with verified skills for fact-checking, red-teaming, or writing, or find participants with confirmed qualifications, like healthcare or STEM professionals. Companies using our specialist participants have increased approval rates for AI training and evaluation tasks by up to 30%.

Layer 5: Maximizing participant motivation and performance

Retaining our best participants for ongoing data collection.

To deliver high-quality responses, it’s essential that we protect good participants as fiercely as we exclude bad ones. Not only does participant loyalty increase motivation to perform well, but it also makes more complex, ongoing data collection possible on Prolific.

Prolific sets the gold standard in this space.

Prolific was the first to require a fair minimum participant reward ($8.00 or £6.00 per hour), and still leads the way. Participants consistently report that it’s harder to earn on other platforms than on Prolific, which contributes to high retention rates.

To start actively measuring participant wellbeing, we launched industry-leading psychometric wellbeing checks in 2024, setting new standards for ethical human data collection. Learn more in our wellbeing report.

Ongoing human oversight and fine-tuning of our data quality systems are also crucial in protecting good participants and maximizing engagement. Our support team handles appeals, overturns unfair decisions, and continuously refines our processes. Proactive spot checks of banned accounts help us overturn any unfair rejections and learn how to improve our rules. If a rule can be tweaked to become fairer to good participants while removing low-integrity participants, we’ll change it.

The result of all these measures is a highly engaged pool of exceptional participants. Our latest participant Net Promoter Score (NPS) of 65 places us in the top quartile for technology, reflecting genuine loyalty to the platform. You can see this serious engagement firsthand in our active Reddit community. Many dedicated contributors have been with us since the beginning.

The advantage of Prolific: Human insight, authentically delivered

In an AI-driven world, genuine human perspectives are both precious and vulnerable.

Protocol ensures every response is authentically human through five uncompromising layers of protection. It does this by:

- Only letting in real, high-quality participants who pass over 50 identity and quality checks.

- Not stopping at upfront checks, but conducting ongoing pool-wide fraud detection to swiftly remove any bad actors.

- Ensuring researchers and data collectors are equipped with highly accurate in-study quality checks for AI use, attention, comprehension, and speeding.

- Providing performance-based access to studies, where the best participants get priority and consistently poor performers are removed.

- Maximizing participant engagement and retention through industry-leading policies and rewards.

This system benefits everyone. Data collectors get reliable data, and our valued participants enjoy a platform free from unfair competition and low-quality studies.

Don’t discover your data is contaminated too late. Work with a partner that refuses to compromise on what matters most: data integrity. Join Prolific today.