Beyond Technical Evals: Why Human-Centered AI Benchmarks Matter

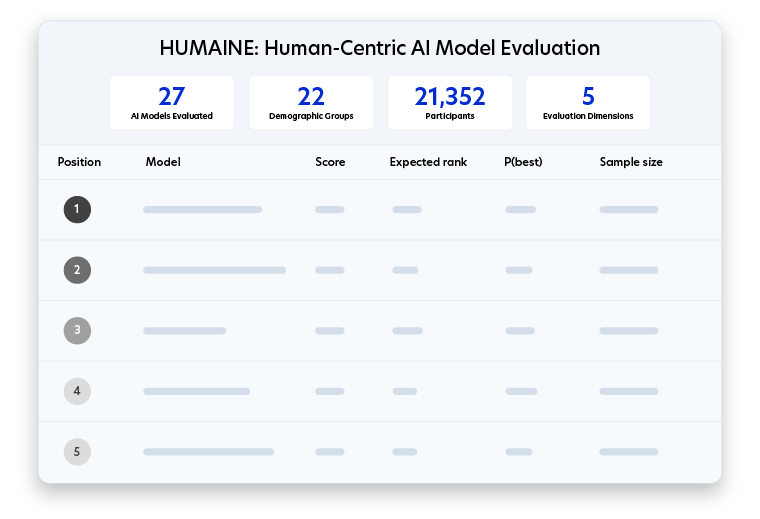

HUMAINE is a human-preference leaderboard that evaluates frontier AI models based on real-world usage. Unlike traditional benchmarks that mainly track technical performance, HUMAINE captures how diverse users actually experience AI—across everyday tasks, trust and safety, adaptability, and more.

By combining rigorous methodology with feedback from a representative pool of real people, HUMAINE offers the insights model creators and evaluators need to understand not just which model performs best, but why. Updated regularly, it provides a dynamic view of model strengths, weaknesses, and user satisfaction.


HUMAINE Leaderboard FAQs

HUMAINE is designed for AI labs, model creators, and evaluators who want to understand how their models perform in real-world contexts. It’s also useful for researchers, policymakers, and practitioners interested in human-centered AI evaluation.

Results are refreshed as new models are added and new data is collected. This ensures the leaderboard reflects the latest performance trends across the AI ecosystem.

You can explore the full HUMAINE leaderboard data, including demographic and task-level analysis, on our dedicated app. The underlying datasets are also available, and an announcement blog post and a detailed paper will follow soon.

Prolific brings deep expertise in human-centered research and access to a diverse, representative pool of verified participants. This ensures that evaluations are fair, reliable, and grounded in real user experience.

An AI evaluation leaderboard ranks artificial intelligence models based on specific benchmarks. The HUMAINE leaderboard is unique because it uses statistically rigorous, multi-dimensional human feedback to measure real-world performance—like reasoning, trust, and communication style—rather than just technical test scores.
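To make the idea of ranking from multi-dimensional human feedback concrete, here is a minimal sketch. It is not HUMAINE's actual methodology, which this page does not describe. It assumes feedback arrives as pairwise votes (a participant prefers one model over another on a given dimension) and ranks models by simple win rate. The function name `win_rates_by_dimension`, the vote format, and the model names are all hypothetical.

```python
from collections import defaultdict


def win_rates_by_dimension(votes):
    """Rank models per dimension by the share of comparisons they won.

    `votes` is a list of (dimension, winner, loser) tuples, one per
    human judgment. This vote format is an assumption for illustration.
    """
    wins = defaultdict(lambda: defaultdict(int))
    games = defaultdict(lambda: defaultdict(int))
    for dimension, winner, loser in votes:
        wins[dimension][winner] += 1
        games[dimension][winner] += 1
        games[dimension][loser] += 1

    rankings = {}
    for dimension, played in games.items():
        rates = {m: wins[dimension][m] / n for m, n in played.items()}
        # Highest win rate first; ties broken alphabetically for stability.
        rankings[dimension] = sorted(rates.items(), key=lambda kv: (-kv[1], kv[0]))
    return rankings


# Hypothetical votes on two of the dimensions named above.
votes = [
    ("trust", "model_a", "model_b"),
    ("trust", "model_b", "model_a"),
    ("trust", "model_a", "model_b"),
    ("reasoning", "model_b", "model_a"),
]
rankings = win_rates_by_dimension(votes)
```

In this toy data, `model_a` leads on trust while `model_b` leads on reasoning, which is the kind of per-dimension difference a single aggregate score would hide. A production leaderboard would likely use a statistical model with uncertainty estimates rather than raw win rates.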

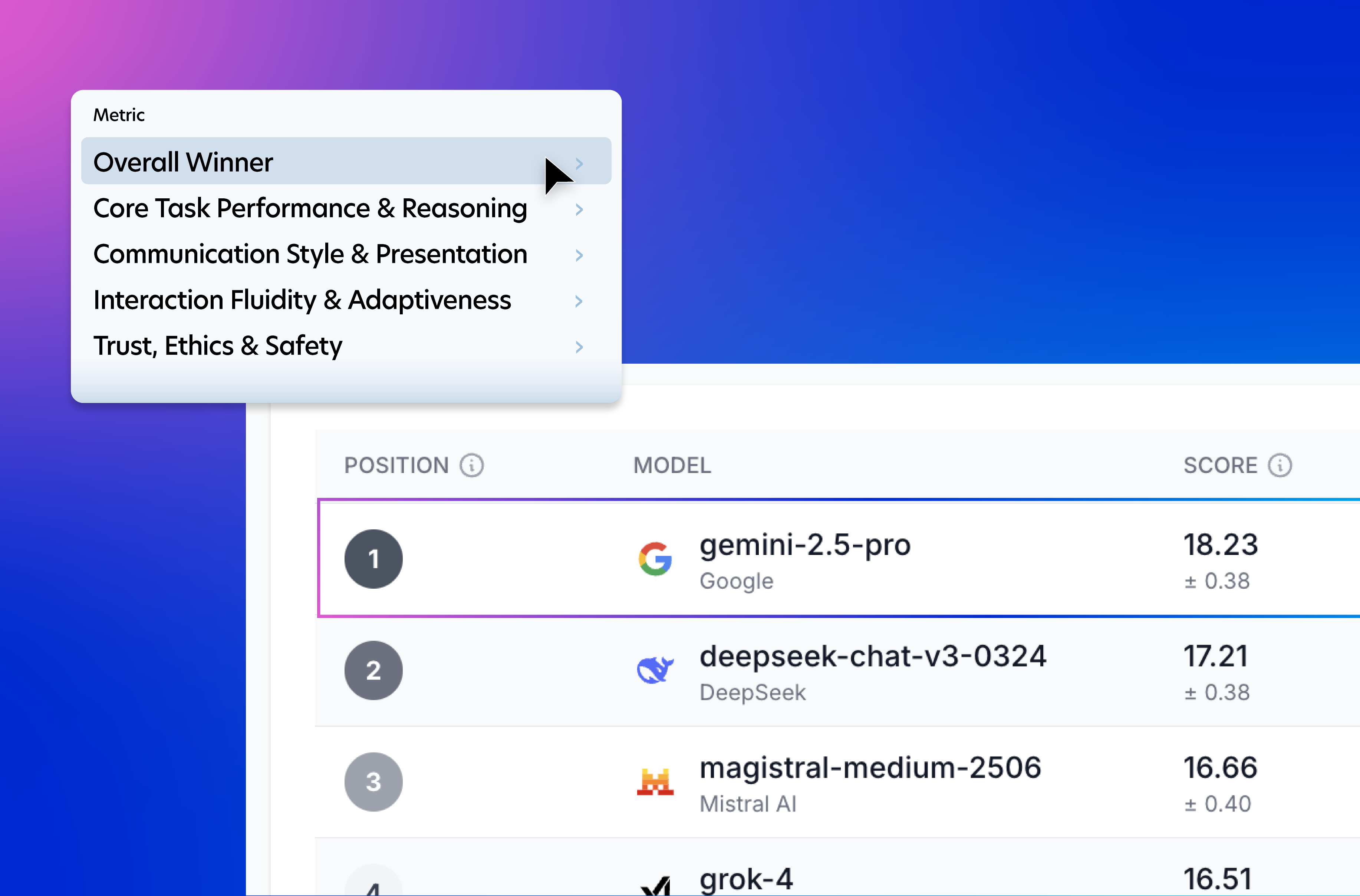

Based on the HUMAINE human-centered leaderboard, Google's Gemini 3 Pro is currently the top-ranked model overall. Evaluated by real people across diverse demographics, it consistently ranks first for overall preference and outperforms other frontier models in core task performance.