The human intelligence layer for frontier AI

Trusted by the leaders in AI

Why AI labs and enterprises choose Prolific

A unified infrastructure to design, launch, and manage human data workflows at frontier speeds.

Specialist human intelligence, profiled for advancing models

Behind every evaluation is a carefully profiled, verified human. We combine demographic, behavioral, and domain-level profiling with ongoing verification to surface people who deliver reliable human data, when you need it.

Automate human data collection

We build custom evaluations from the ground up

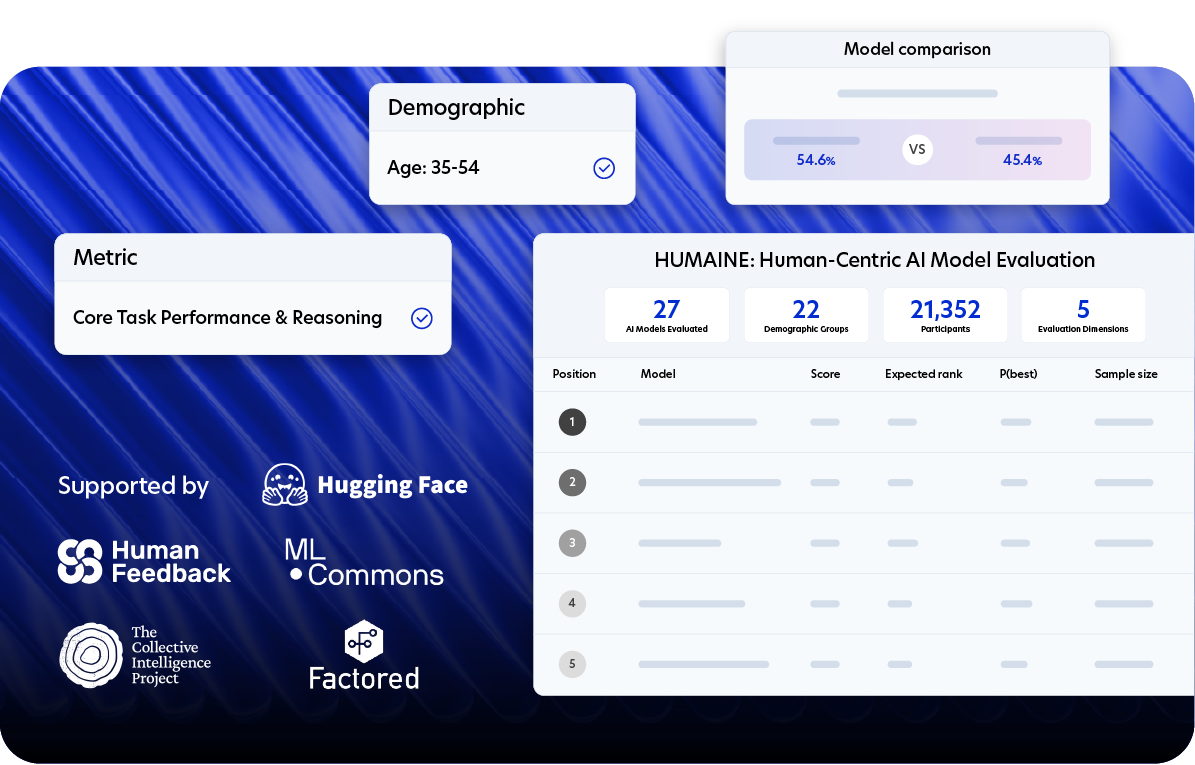

HUMAINE is a public benchmark and evaluation framework for assessing model behavior in real-world, human-facing conditions. Developed through peer-reviewed research and ongoing empirical work, it allows model builders to run human-centered evaluations that support systematic comparison, diagnostics, and iteration on deployed models.

Inside Deliberate Lab: Group Decision-Making with AI

We discuss LLM simulacra that mirror human biases or identify optimal leaders, why AI facilitation can work better with less instruction, and the challenges of running synchronous research online. We also explore a negotiation study where humans and LLMs achieved similar outcomes through very different strategies, and what that means for deployment.

Read how Prolific collaborates with industry innovators

Peer-reviewed research using data from Prolific.