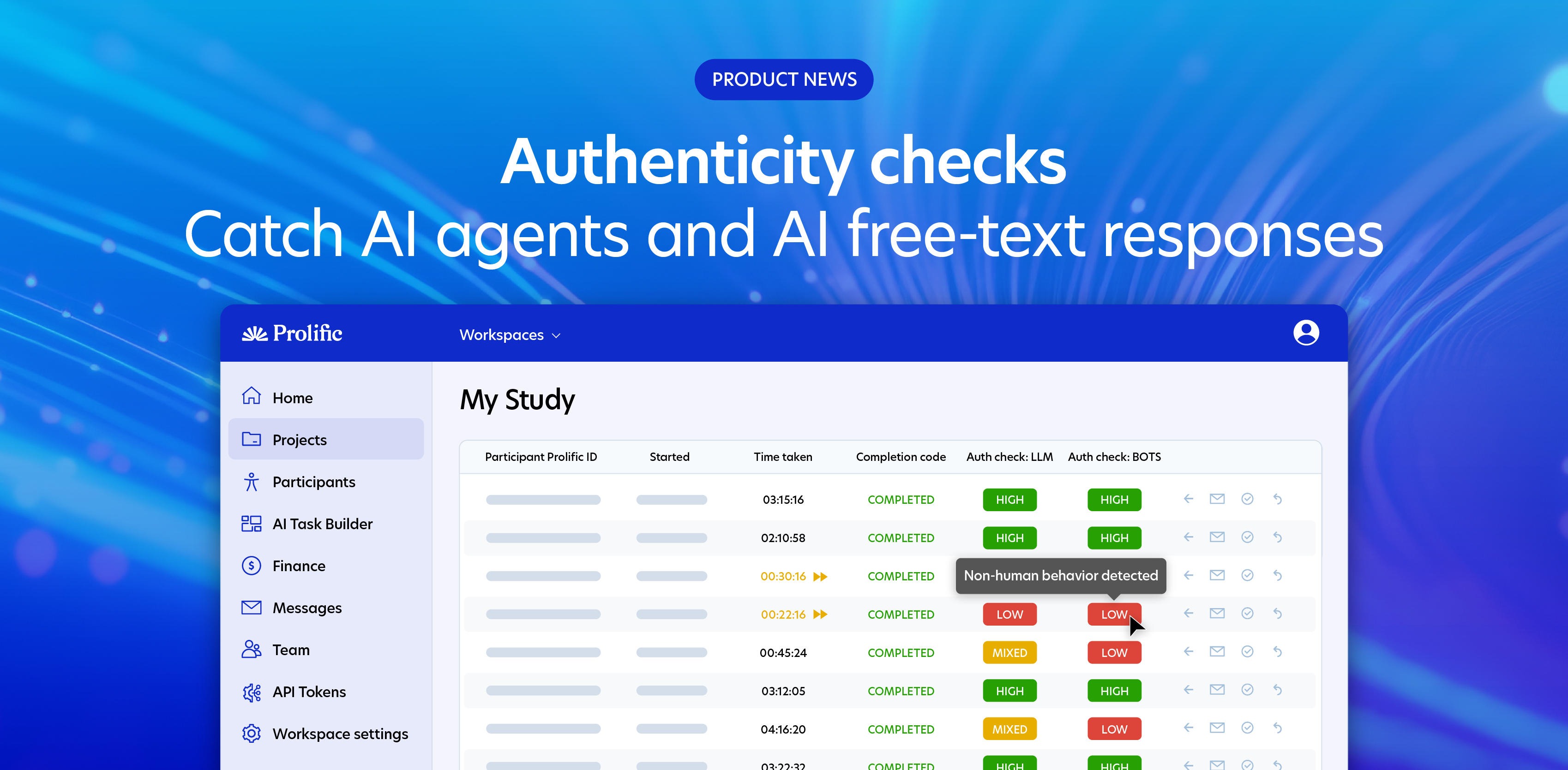

Introducing authenticity checks: Detect AI agents and responses in your research with exceptional accuracy

As AI becomes increasingly prevalent in our digital lives, the research world faces a critical inflection point. Pure, authentic human insights have never been more valuable, or more threatened.

That's why we've built authenticity checks: a powerful new tool that automatically detects AI-generated free-text responses with 98.7% precision, and AI agents with 100% accuracy in testing.

The challenge of AI in primary data collection

Large language models like ChatGPT have become increasingly accessible and sophisticated, which means researchers face a growing challenge: how can we distinguish between authentic human responses and AI-generated answers?

We’ve directly observed how AI threatens research validity across the industry, with a rise in AI-generated responses from participants.

At Prolific, we’re committed to preserving authentic primary data collection. That’s why we've developed a new behavioral detection system that goes beyond surface-level content analysis.

Introducing new authenticity checks

Authenticity checks use advanced behavioral analysis to detect when participants may be using AI tools (like ChatGPT) or running an AI agent to answer your questions, instead of providing genuine responses.

It’s difficult to tell if a response is authentic just by reading it. These checks look for suspicious behavioral patterns rather than analyzing the text itself, adding a more accurate layer of defense on top of manually reading responses.

They’re available now at no extra cost, as part of our commitment to high data quality standards. Sign up or log in to try them now.

Two kinds of authenticity checks to give you total confidence in your results

There are two kinds of authenticity checks for you to use, depending on your study or tasks.

1. LLM checks

These detect participants using AI to help answer free-text questions with 98.7% precision. They look for 15 different behaviors, like copy-pasting and tab-switching, to determine whether participant answers are authentic or not.

LLM authenticity checks are available to use with Qualtrics and Prolific’s AI Task Builder.

These checks only work for free-text questions and won’t work on other question types. They will also unfairly flag responses if your free-text questions require participants to use or refer to external sources.

2. Bot checks

These identify when an AI agent or bot is answering your study, with 100% accuracy in testing. They look for non-human behaviors or automated environments across your whole set of questions, working with any question type.

In testing, these checks outperformed various other methods on the market at detecting AI agents, with a perfect record of separating humans from AI agents.

Bot authenticity checks are available to use with Qualtrics.

How to set up authenticity checks for Qualtrics

- Insert a Qualtrics link when setting up a study in Prolific, and the option to include authenticity checks will appear.

- Select which authenticity checks make sense for your study: Bot or LLM, or both. Then insert the provided JavaScript into Qualtrics.

- As each participant answers, our model looks for real-time behaviors that suggest their response isn’t authentic. It runs many different checks and uses machine learning to weigh up the likelihood of an inauthentic response. When the model has high confidence, it’ll flag the response.

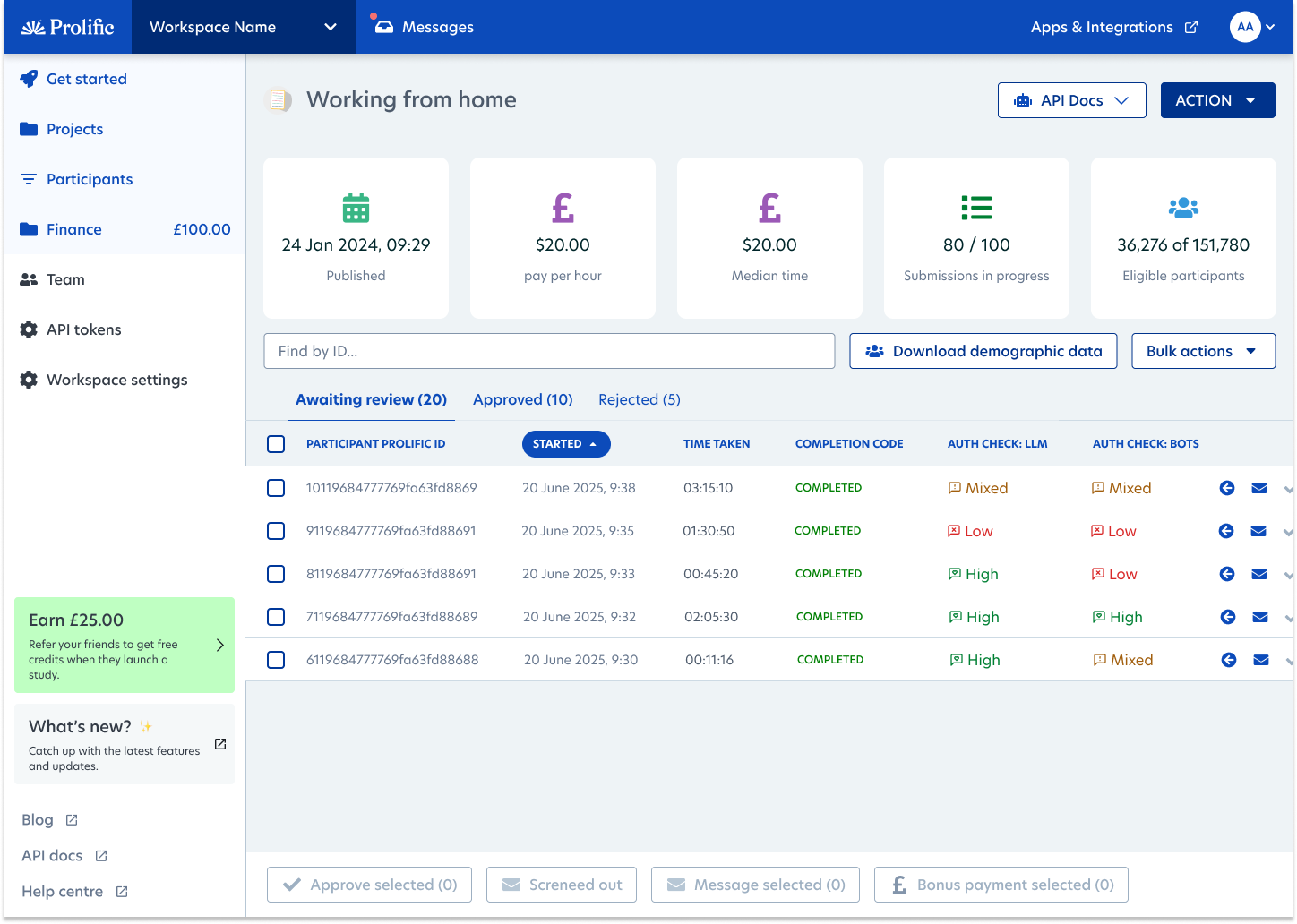

- When participants submit responses, Prolific will show you which ones have been flagged for LLM or Bot checks in the two authenticity check columns. You’ll receive the following flags:

  - High (green): All responses have high authenticity and have passed the check

  - Low (red): Low authenticity patterns detected and the participant has failed the check

  - Mixed (orange): For LLM checks, this means some questions were flagged (e.g., 2 out of 4 seemed inauthentic). For Bot checks, “mixed” means there weren’t enough signals to confirm high or low authenticity.

- These flags mean you can easily see and sort through flagged submissions, saving countless hours of manual checks.
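If you export your submissions and want to work through them in a script, the flags described above lend themselves to a simple triage. The sketch below is only an illustration: the `Submission` fields, the `triage` labels, and the ordering rule are our own assumptions, not part of Prolific's product or API.

```python
from dataclasses import dataclass

# Flag values as described above; lower number = less authentic-looking.
# The field names and triage labels below are hypothetical.
SEVERITY = {"Low": 0, "Mixed": 1, "High": 2}


@dataclass
class Submission:
    participant_id: str
    llm_flag: str  # "High", "Mixed" or "Low"
    bot_flag: str  # "High", "Mixed" or "Low"


def triage(sub: Submission) -> str:
    """Suggest a next step from the two authenticity flags."""
    flags = (sub.llm_flag, sub.bot_flag)
    if "Low" in flags:
        return "review for rejection"
    if "Mixed" in flags:
        return "manual check"
    return "accept"


def sort_by_risk(subs):
    """Order submissions so the least authentic-looking come first."""
    return sorted(subs, key=lambda s: min(SEVERITY[s.llm_flag], SEVERITY[s.bot_flag]))


submissions = [
    Submission("p1", "High", "High"),
    Submission("p2", "Mixed", "High"),
    Submission("p3", "High", "Low"),
]

for sub in sort_by_risk(submissions):
    print(sub.participant_id, triage(sub))
```

Putting low-authenticity submissions first means the ones most likely to need a decision are reviewed before anything else, while "mixed" results still get a human look rather than an automatic outcome.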

Given the very high precision of authenticity checks, responses flagged for low authenticity may be rejected, as long as LLM checks have been used fairly. Read our complete guide to authenticity checks.

Easily sort responses, with highly accurate checks

We strive to maximize effective detection while remaining fair to participants. Our extensive internal testing shows that authenticity checks are highly precise:

- When our Bot authenticity check flags AI agents or bots, it’s correct 100% of the time based on our testing, so you can feel confident excluding low authenticity responses from your submissions.

- When our LLM authenticity checks flag answers as AI-generated, they're correct 98.7% of the time, offering a reliable way to sort, double-check, and remove responses. With a low false positive rate (0.6%), there is minimal risk of incorrectly flagging authentic participants.
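Precision and false positive rate measure different things, so it can help to see how each is calculated. The sketch below uses made-up counts, chosen only so the results land on the same percentages quoted above. They are not Prolific's test data.

```python
def precision(true_pos: int, false_pos: int) -> float:
    """Share of flagged responses that really were AI-generated."""
    return true_pos / (true_pos + false_pos)


def false_positive_rate(false_pos: int, true_neg: int) -> float:
    """Share of authentic responses that were flagged by mistake."""
    return false_pos / (false_pos + true_neg)


# Hypothetical counts for illustration only, not Prolific's test data:
# 450 AI-generated responses correctly flagged, 6 authentic responses
# wrongly flagged, 994 authentic responses correctly left alone.
true_pos, false_pos, true_neg = 450, 6, 994

print(f"precision: {precision(true_pos, false_pos):.1%}")
print(f"false positive rate: {false_positive_rate(false_pos, true_neg):.1%}")
```

Precision answers "when a response is flagged, how often is the flag right?", while the false positive rate answers "how often does an authentic participant get flagged?".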

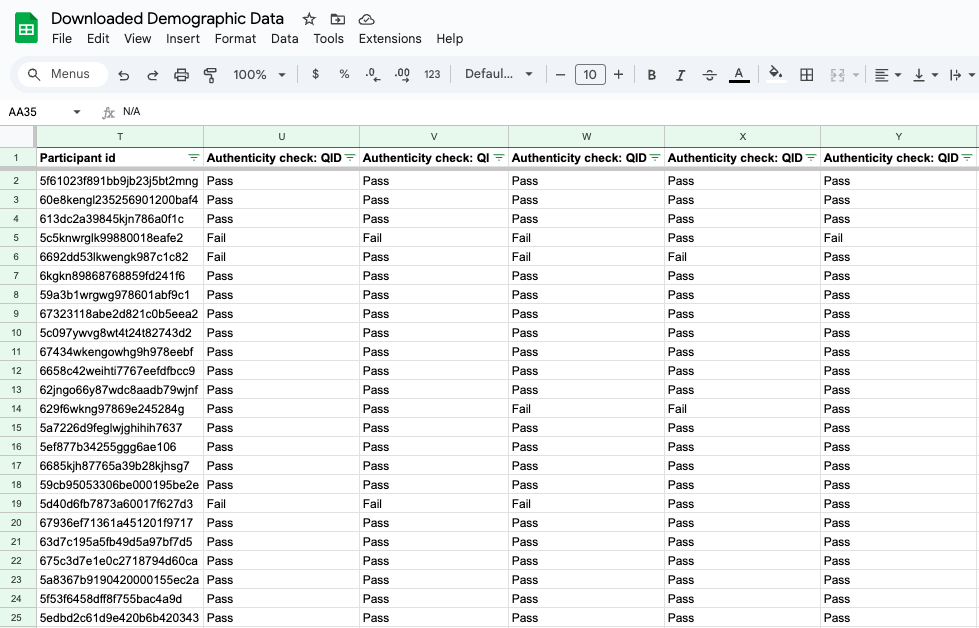

If you have multiple free-text questions in your survey, use the ‘Download demographic data’ feature on the submissions page to see which questions were flagged for AI use.
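Once you have the download, a few lines of Python can list the flagged questions per participant. This is a rough sketch only: the column names (`participant_id`, `q1_flagged`, and so on) and the `yes`/`no` values are placeholders we invented, so check the real file for its actual layout.

```python
import csv
import io

# Stand-in for the downloaded file. The column names and values are
# hypothetical; check the real export for its actual layout.
EXPORT = """participant_id,q1_flagged,q2_flagged,q3_flagged,q4_flagged
p1,no,no,no,no
p2,yes,no,yes,no
"""


def flagged_questions(row: dict) -> list:
    """Return the question columns marked as flagged for one participant."""
    return [
        name
        for name, value in row.items()
        if name.endswith("_flagged") and value.strip().lower() == "yes"
    ]


def summarize(export_text: str) -> dict:
    """Map each participant to the list of questions that were flagged."""
    reader = csv.DictReader(io.StringIO(export_text))
    return {row["participant_id"]: flagged_questions(row) for row in reader}


for participant, questions in summarize(EXPORT).items():
    print(participant, f"{len(questions)} flagged:", questions)
```

In this made-up example, `p2` has two of four questions flagged, which is the kind of result that would show up as "Mixed" for LLM checks.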

Which platforms support authenticity checks?

Because we build a bespoke integration with each platform, authenticity checks are currently compatible with projects designed in the tools most commonly used alongside Prolific:

- Qualtrics (both Bot and LLM authenticity checks supported)

- Prolific's AI Task Builder (LLM authenticity checks supported and will run automatically for every set of tasks)

Over time, we’ll expand compatibility with additional platforms based on your feedback.

Best practices for implementation

Whilst we already tell participants not to use AI on Prolific (unless a task instructs them to do so), we’ve found it’s best to remind them in the question itself. Our internal tests found that adding clear reminders reduced AI usage by 61%, making this a simple but effective first line of defense.

Learn more about best practices around authenticity checks.

Sign up to Prolific to try authenticity checks today.

Want to learn more about Prolific’s approach to data quality?

Find out about all the things we do at Prolific to tackle bots and AI in online research.

Read about our full multi-layered approach to ensuring we deliver high quality data, including ID verification, test studies, bot detection, and more.

Watch our VP of Product, Sara Saab, introduce our multi-layered data quality measures in 90 seconds: