Prolific vs. User Interviews: choosing a participant recruitment platform in 2026

Research teams often evaluate participant recruitment tools based on speed, reach, and audience fit. Increasingly, structural factors like independence, flexibility, and long-term risk also play a role.

Recent changes in the research tools market, including User Interviews becoming part of UserTesting, have prompted many teams to revisit how they source participants. This is not because existing tools no longer work, but because the context around them has shifted.

Prolific and User Interviews are both widely used for recruiting participants across academic, commercial, and B2B research. While they solve similar problems, they are built on different models, and those differences matter more as research stacks become more complex.

This comparison looks at how Prolific and User Interviews differ today, and what those differences mean for teams deciding how to run research in 2026.

The new landscape: independence and consolidation

Participant recruitment does not exist in isolation. It sits alongside survey tools, testing platforms, analytics, and internal research operations. As a result, the structure of a recruitment platform increasingly shapes how easily it fits into a broader workflow.

One of the key differences between Prolific and User Interviews is how they relate to the rest of the research stack. Prolific is designed to operate independently of any other tool, while User Interviews now provides participant recruitment as part of UserTesting's wider insights platform.

The acquisition reflects a broader trend toward consolidation in the research and insights industry. Platforms that were once standalone are increasingly becoming components of end-to-end ecosystems, designed to work best with tools from the same provider rather than with any tool a team chooses.

For research teams, particularly those running B2B or enterprise studies, this raises a practical question early in the decision process: Do I want participant access that is tied to a specific platform, or infrastructure that remains usable across my preferred tools and workflows?

That question does not have a single correct answer. But it sets the frame for the rest of the comparison, including tool compatibility, data quality, pricing, and procurement considerations.

Core positioning and origins

Prolific and User Interviews were built to solve similar problems, but they originate from different philosophies about how participant recruitment should work.

Prolific

Prolific was founded in 2014 at the University of Oxford by researchers who needed a more reliable way to recruit participants for academic studies. From the beginning, the focus was on data quality, fair participant treatment, and infrastructure that could support many types of research without dictating tools or methods. That core mission has remained consistent as Prolific expanded beyond academia into commercial research, product research, and AI training and evaluation.

Today, Prolific operates as an open, tool-agnostic participant infrastructure. Researchers can use it alongside any survey tool, experiment platform, or research workflow without committing to a specific ecosystem. This design reflects the reality that research teams often use multiple tools, change vendors over time, and adapt their stacks as needs evolve.

User Interviews

User Interviews was built as a recruitment platform for designers, researchers, and product teams. Its strength has historically been in supporting professional and B2B recruitment at scale, with workflows tailored to industry research use cases. Under UserTesting, it now sits within a broader end-to-end insights offering that combines participant recruitment with testing, analysis, and enterprise tooling.

Both platforms continue to support serious research. The distinction lies less in capability and more in structure: One is an independent infrastructure that works across ecosystems. The other is increasingly part of a single, integrated insights stack. Understanding that difference helps teams assess which model best fits their research strategy in the years ahead.

Research types and tool compatibility

Modern research teams rarely rely on a single tool. Surveys, usability testing, interviews, and experiments are often run across different platforms, sometimes within the same project. As a result, compatibility and interoperability have become central considerations when choosing how to source participants.

Prolific

Prolific works with any research method and any tool. Researchers can recruit participants for surveys, experiments, interviews, or longitudinal studies, and run those studies in the tools they already use. Participant access is not tied to a specific testing or analytics platform, so teams can adapt their workflows over time without changing how they recruit.

User Interviews

Historically, User Interviews has also supported a wide range of research types and tools. The acquisition by UserTesting introduces a period of transition, where long-term integration and interoperability decisions are still taking shape. While tighter integration within a single insights platform may benefit some teams, it can also reduce flexibility for those who rely on a more distributed research stack.

This distinction becomes more important as research operations mature. Enterprise and B2B teams often work across multiple vendors, collaborate with external agencies, or need to meet internal procurement requirements that limit dependency on any one platform. In these contexts, the ability to source participants independently of the rest of the research stack can simplify operations and reduce risk.

As research tools continue to evolve, flexibility is less about convenience and more about resilience. Choosing participant infrastructure that remains compatible across methods and platforms helps teams stay adaptable as their needs change.

Participant pool size, targeting, and quality

User Interviews

User Interviews operates a participant network of around six million people. This scale supports broad reach and fast matching across many professional profiles. Participants are typically invited to projects based on algorithmic matching and their likelihood of qualifying, which can work well for common roles and general B2B criteria.

Prolific

Prolific maintains a smaller, more tightly curated pool of over 200,000 active participants. Rather than prioritizing scale, Prolific emphasizes verification, data quality, and precise targeting. Participants answer detailed profile questions in advance, and these pre-verified profiles let researchers filter audiences before a study is launched. Only participants who meet those criteria are shown the study, reducing reliance on post-hoc screening.

This distinction matters as concerns grow across the research industry about bots, AI-generated responses, and low-engagement professional respondents. In response, Prolific focuses on identity verification, anti-fraud measures, and participant behavior monitoring to support replicable, high-integrity research.

For teams running B2B studies, the trade-off is not simply size versus speed. It is about whether access to a very large pool or tighter control over who sees and enters a study better supports the quality requirements of the research.

Speed of recruitment

Prolific

On Prolific, many studies return complete datasets within two hours on average. Speed is driven less by pool size and more by pre-verification, targeting precision, and participant activity. When participants already meet the required criteria, researchers can launch studies without extensive screening or iteration. Unlike competitors, Prolific reports only the number of active participants who have taken studies in the past 90 days, rather than the total number of people who have ever used the platform.

User Interviews

User Interviews states that recruitment timelines vary based on audience specificity and study requirements. According to its published guidance, some studies can recruit participants within a few days, while more specialized or niche professional audiences may take longer to source.

As with any recruitment model, timelines depend on how narrowly defined the audience is and how many participants qualify once screening begins. Speed is closely tied to quality controls. Pre-verified profiles, clear eligibility criteria, and participant engagement all affect how quickly a study can be completed.

Custom panels and longitudinal research

Prolific

Prolific supports longitudinal research by allowing researchers to recontact the same participants across multiple studies. This enables cohort-based research, follow-up waves, and long-running projects without rebuilding audiences from scratch. Because participants are already verified and profiled, researchers can maintain continuity while adjusting study design as needed.

User Interviews

User Interviews also supports recontacting participants and managing ongoing research relationships. Under an integrated insights platform, longitudinal research may increasingly be managed alongside testing and analysis workflows, which can suit teams already committed to that ecosystem.

For B2B and enterprise research, the key consideration is control. Some teams benefit from deeply integrated workflows, while others require the flexibility to run longitudinal research across multiple tools, vendors, or agencies. In those cases, participant infrastructure that remains independent of the rest of the stack can simplify coordination and reduce friction over time.

Pricing models and cost predictability

User Interviews

User Interviews uses a credit-based pricing model. Its lowest tier is offered on a pay-as-you-go basis, while higher-volume plans operate on subscription pricing billed annually. Researchers purchase credits or sessions, which are then exchanged for participant recruitment depending on audience type and study requirements. For teams new to the platform, understanding total study cost can require converting credits or sessions into an effective per-participant price.

Prolific

Prolific uses clear, per-participant pricing. Researchers set a payment for participants’ time and pay a transparent platform fee on top. Costs are pay-as-you-go and visible upfront, making it easier to calculate total study spend before launch.

For research operations and procurement teams, this difference can matter. Clear pricing reduces the need for conversion, estimation, or explanation, and can make it easier to forecast budgets across multiple studies and time periods.

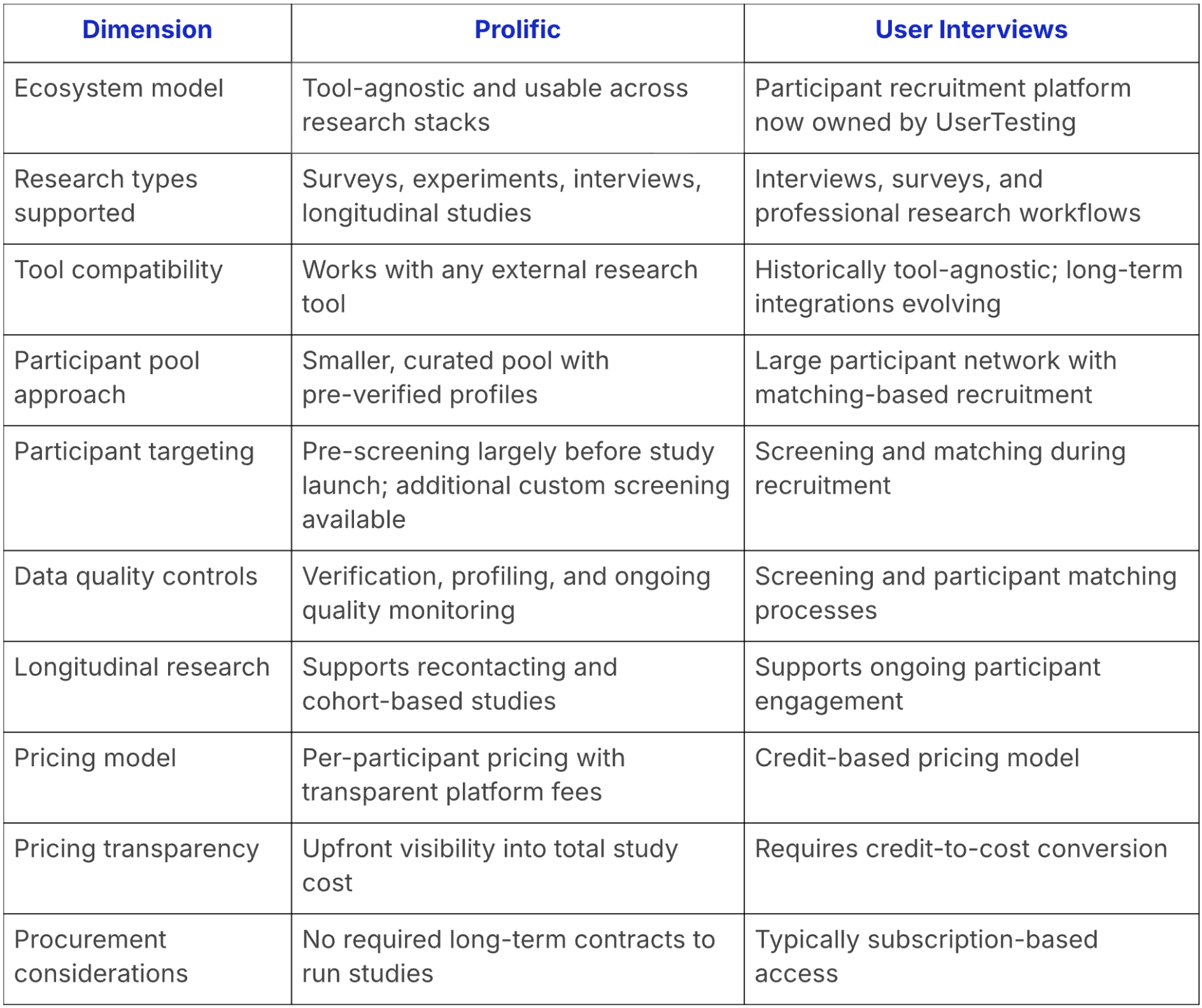

The table below summarizes some of the structural differences discussed above.

Choosing the right platform in 2026

As research practices continue to evolve, choosing a participant recruitment platform is no longer just a question of reach or features. For many teams, it has become a strategic decision about flexibility, risk, and long-term fit.

When evaluating options in 2026, research teams may want to consider the following factors.

Independence and ecosystem fit

Some platforms are designed to operate as part of a broader insights ecosystem. Others are built to remain independent and usable across tools and workflows. The right choice depends on whether a team prefers tightly integrated stacks or the freedom to adapt their research setup over time.

Research diversity and future use cases

As B2B research grows in importance, teams often expand into new methods, audiences, and study designs. Participant infrastructure that can support a wide range of research types without requiring changes to the rest of the stack can make that evolution easier.

Data quality and trust

Concerns around low-quality responses, automation, and AI-generated data are increasing across the industry. Platforms differ in how they verify participants, monitor behavior, and protect data integrity. For many teams, these safeguards are becoming as important as speed or scale.

Cost structure and predictability

Pricing models shape how research programs scale. Transparent, usage-based pricing can offer flexibility, while subscription-based models may suit teams with stable, high-volume needs. Understanding how costs change as research expands is key to avoiding surprises.

Procurement and vendor risk

For enterprise teams, participant recruitment is rarely evaluated in isolation. Ownership structure, vendor dependencies, and long-term roadmap alignment increasingly factor into procurement decisions. Platforms that remain independent of competing tools can reduce perceived risk and simplify approval processes.

There is no single right answer for every team. The acquisition of User Interviews reflects a broader shift in the market, and different research organizations will respond in different ways. What matters most is being intentional. Understanding how participant recruitment fits into the wider research ecosystem helps teams make choices that support both current needs and future plans.

If you're considering Prolific for consumer or B2B research, learn more about our consumer and professional audiences.