Balancing transparency and security in authenticity detection

In our recent webinar, Keeping research real in the age of AI, you had plenty of questions about how we're keeping participant responses authentic and reliable. You asked things like: "How exactly do authenticity checks work?" and "What triggers a false positive?" You also wanted to better understand the systems we use to identify patterns of suspicious activity and keep your research data trustworthy.

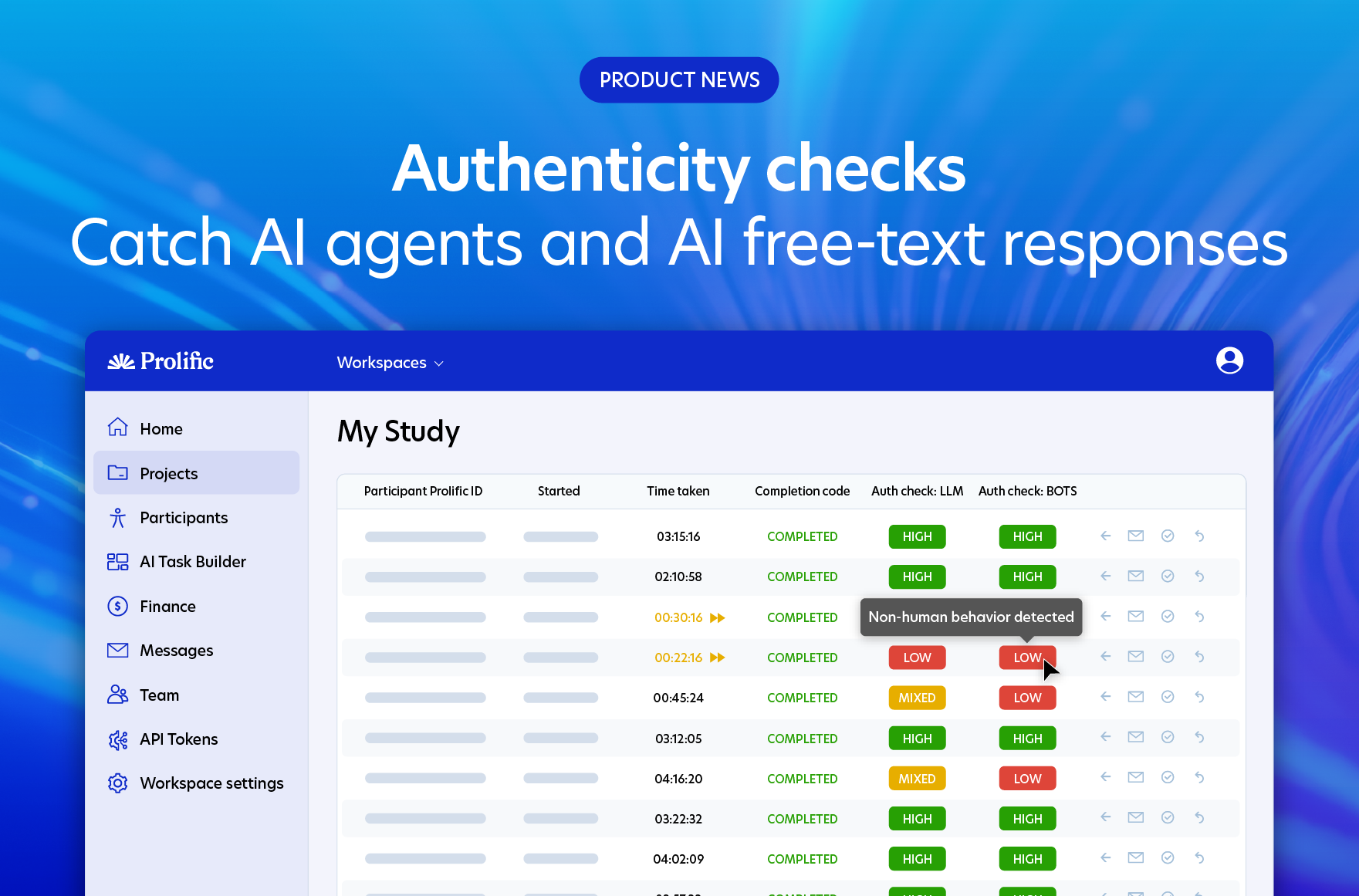

Authenticity checks, for example, help confirm whether participants have genuinely answered free-text questions or leaned on AI for help, with an accuracy rate of 98.7%. While Protocol, our broader data-quality system, combines more than 50 signals to spot and prevent patterns of suspicious participant behavior over time.

Your most pressing questions, answered

We answered many questions in the webinar itself. And below, we've answered further questions about how our checks operate in real scenarios, addressing common misconceptions while clarifying exactly what our processes mean for you and your participants.

We share as much as we can about how we maintain authenticity without compromising participant privacy – enough to build trust without giving away the signals that keep the system secure

1) Are participants being flagged for using VPNs when they didn't know they had them? For example, some phone plans automatically apply a VPN.

We know that banning someone just because they're using a VPN would be unfair. Many people use VPNs for legitimate privacy reasons, and as you mentioned, some don't even realize they have one active.

Our system, Protocol, doesn't work that way. It's designed to weigh up the likelihood of a participant being a bad actor by looking at the results of over 50 different checks. Using a VPN will be noted by Protocol, but it won't result in a ban without other signals that make us highly confident the participant is acting dishonestly.

Think of it like fraud detection at your bank. One unusual transaction doesn't trigger an account freeze, but a pattern of suspicious activity might.

2) How does Prolific identify participants who are 'usually' properly participating, but may occasionally "outsource" a specific task to an LLM?

This is where real-time detection shows its worth. Our authenticity checks look at individual free-text responses within each survey. As participants answer, our model analyzes behaviors that suggest a specific response isn't authentic, right then and there.

It means we can identify when someone who typically participates genuinely decides to use AI for your particular study. We're not just looking at their overall account history as much as we're examining the authenticity of each response as it happens.

3) Sometimes researchers want to encourage LLM use, for example, when studying human-AI interactions. How can researchers signal that participants shouldn't be penalized for AI use when it's encouraged?

You have full control here. As a researcher, you choose whether to use authenticity checks in your study, and you decide whether to reject or approve submissions based on the results.

If your study encourages LLM use, it’s best not to include authenticity checks. If you're curious about the results and decide to run them anyway, please don't reject participants based on those authenticity check results. The choice is entirely yours.

4) There's a perception that a rejection means a participant gets banned. Is this correct?

Without a doubt, this question is one of the biggest misconceptions we encounter. A single rejection from authenticity checks won't cause a participant's account to be put on hold, unless they've already reached a certain percentage of rejections overall.

We understand that mistakes happen, edge cases exist, and sometimes legitimate participants might trigger our systems.

That's why we don't make account-level decisions based on isolated incidents. Participants are almost never banned for one single action. An outright ban based on a single action would only happen when it's definitive fraud, for example uploading a fake ID. Instead, we use a system of many checks and balances that builds up a picture of how trustworthy a participant is over time.

Looking at the bigger picture

Protocol weighs dozens of signals to identify patterns that suggest fraudulent behavior. It's not about catching someone in one moment. Think of it more as identifying consistent patterns that indicate someone isn't participating authentically.

Taking this approach allows us to protect both researchers who need genuine data and participants who are acting in good faith. It's not perfect, but it's designed to be fair while keeping your research authentic.

We know you'd love even more transparency about how these systems work. We're always looking for ways to share more without compromising effectiveness. As AI detection evolves and bad actors develop new tactics, we'll keep refining this balance between openness and security.

Because at the end of the day, we're all working toward the same goal: research you can trust, powered by authentic human insights.