Start your project

Start by setting up a new study in your workspace. Name it clearly and describe the task the participants need to complete.

Sign up and start instantly. No contracts, no minimums - just transparent pay-as-you-go pricing.

Connect your tasks

Host your tasks on any data collection interface - yours or third-party, across text, image, audio and video.

Add task URLs via our UI or API. Use Taskflow to dynamically distribute thousands of unique tasks.

Or create text annotation and evaluation tasks directly with AI Task Builder.

FEATURES

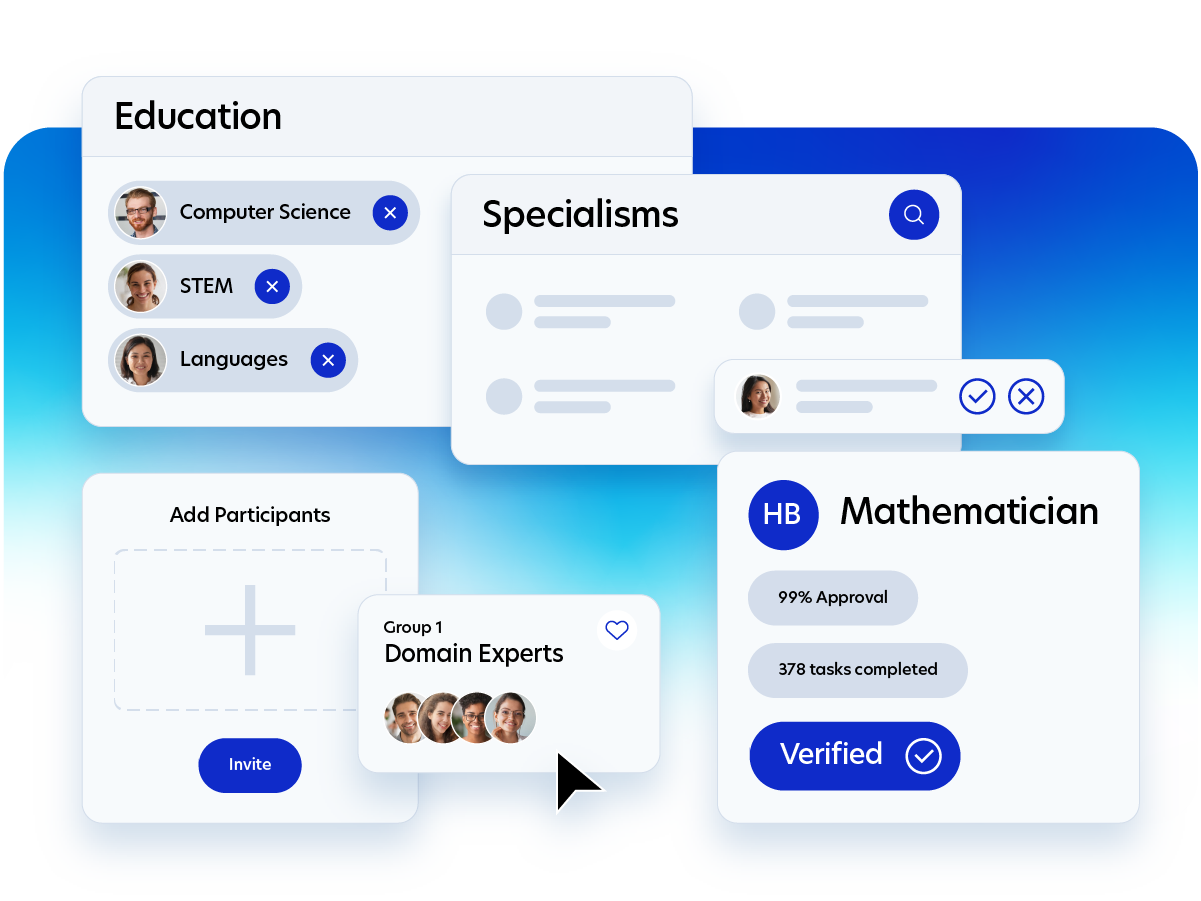

Find your audience

Target the most relevant participants for your project.

Use our 300+ prescreeners, or choose specialist participants verified for their AI evaluation skills or domain expertise.

Set reward rates and launch

Set participant payments based on task complexity, time, and expertise required. Use the in-app calculator and recommended rates for guidance.

Add funds and start collecting data in minutes.

Monitor submissions in real time to track project completion.

Need end-to-end support?

Get complete datasets and let our managed services team handle participant sourcing, quality assurance, and project management - so you can focus on model development.

How leading AI teams use Prolific

From frontier labs to AI-first startups, teams rely on Prolific's human intelligence layer to build safer, more capable models.

How our customers use Prolific

Trusted by AI/ML developers, researchers, and leading organizations in academia and across industries.

Questions?

Teams building leading frontier AI models and AI products use Prolific for model evaluation, RLHF data collection, safety testing, and UX research. Our platform works with any annotation tool that generates URLs, supporting all data modalities - text, image, audio, and video.

We give you direct access to 200,000+ verified participants through an API-first platform - no hired annotation teams or black-box operations. Host tasks on your infrastructure, access people and experts from all walks of life, and get results in hours instead of weeks.

Data typically starts arriving within hours. Simple tasks can complete the same day, while specialized domain expert tasks may take a few days depending on requirements.

We manage quality by maintaining a bar for entry and participation on our platform. We have 200,000+ active participants on the platform, with over a million on the waitlist. Protocol, our AI-powered quality monitoring system, runs 40+ checks that verify identity during onboarding and analyze behavioral data as participants contribute, so that only verified participants remain on the platform. You have full control over submission approval and can reject submissions that show negligent behavior (e.g. failed attention or comprehension checks). For more details on approvals and rejections, check out our help center article.

Our 300+ prescreeners let you access 200,000+ participants from our Global Crowd. They cover a range of profile information, including demographics, personal and professional background, and Prolific submission history. You can also access AI Taskers and Domain Experts - participants verified for their skills in AI training and evaluation tasks and in subjects such as STEM, programming, and healthcare.

Yes. Many teams on Prolific use custom annotation tools or third-party solutions that generate a task URL, which you can share with participants through our UI or API. Alternatively, use AI Task Builder for text annotation and evaluation tasks.

Our pricing is straightforward and transparent:

- When you’re accessing the platform directly, you pay a participant reward that you set, plus a platform fee.

- When you need managed services, pricing is calculated based on your project scope and requirements.

For more details, visit our pricing page.

We typically recommend paying participants at least £9.00 / $12.00 per hour; the minimum pay allowed is £6.00 / $8.00 per hour. For tasks requiring specialized skills (such as experience with AI annotation tasks, coding experience, or advanced STEM degrees), we recommend higher compensation to reflect participants' expertise and the complexity of the tasks. Fair compensation leads to better data quality and higher engagement, especially when publishing complex tasks or studies. For more details, visit our pricing page.
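To make the hourly figures concrete, here is a minimal sketch of how a per-participant reward could be worked out from an estimated completion time. It is not the in-app calculator: the function names `reward_for` and `meets_minimum` are hypothetical, only the GBP rates quoted above are used, and the platform fee is left out because its rate is not stated here.

```python
from decimal import Decimal, ROUND_UP

# Hourly rates quoted in the text above (GBP). The USD equivalents are 8.00 and 12.00.
MIN_HOURLY_GBP = Decimal("6.00")
RECOMMENDED_HOURLY_GBP = Decimal("9.00")


def reward_for(minutes: int, hourly_rate: Decimal) -> Decimal:
    """Per-participant reward for a task estimated to take `minutes`.

    Hypothetical helper: rounds up to the nearest penny so the effective
    hourly rate never falls below `hourly_rate`.
    """
    if minutes <= 0:
        raise ValueError("minutes must be positive")
    reward = hourly_rate * minutes / 60
    return reward.quantize(Decimal("0.01"), rounding=ROUND_UP)


def meets_minimum(reward: Decimal, minutes: int) -> bool:
    """True if `reward` for a `minutes`-long task pays at least the minimum hourly rate."""
    if minutes <= 0:
        raise ValueError("minutes must be positive")
    return reward * 60 / minutes >= MIN_HOURLY_GBP


if __name__ == "__main__":
    estimated_minutes = 20  # illustrative estimate, not a figure from the text
    print("recommended:", reward_for(estimated_minutes, RECOMMENDED_HOURLY_GBP))
    print("minimum:", reward_for(estimated_minutes, MIN_HOURLY_GBP))
```

Under these assumptions, a reward that fails `meets_minimum` for the estimated time would fall below the minimum pay described above, so the estimate or the reward would need adjusting before launch.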